Fixing model compatibility errors across TensorFlow versions

When you move a model from one system to another, the two systems may not have the same version of TensorFlow, and this mismatch can cause a lot of compatibility issues. This article aims to help fix these issues.

Errors fixed in this article include:

- ValueError: bad marshal data (unknown type code) while loading model

- ValueError: Unknown layer: Functional

Requirements

- Saved model weights in HDF5 (.h5) format

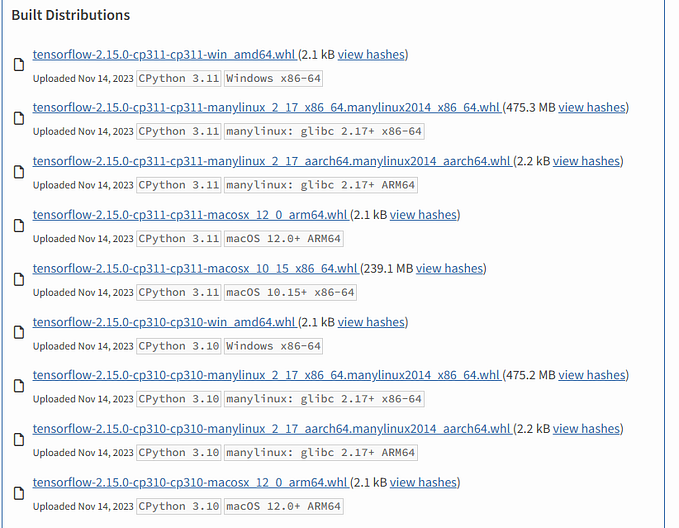

- TensorFlow installed

- CUDA and cuDNN installed if you are using a GPU
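Because these errors come from version mismatches, it helps to record the TensorFlow version (and, on GPU builds, the CUDA and cuDNN versions TensorFlow was built against) on both the old and the new system. A minimal sketch, assuming TensorFlow 2.3 or later, where `tf.sysconfig.get_build_info()` is available; the `describe_environment` helper is hypothetical:

```python
import tensorflow as tf


def describe_environment():
    """Collect version details worth comparing between the two systems."""
    # get_build_info() exists in TF 2.3+; the CUDA/cuDNN keys are only
    # present on GPU-enabled builds, hence .get() instead of indexing
    build = tf.sysconfig.get_build_info()
    return {
        "tensorflow": tf.__version__,
        "cuda": build.get("cuda_version"),
        "cudnn": build.get("cudnn_version"),
        # An empty list means TensorFlow cannot see a GPU
        # (for example, no GPU present or CUDA/cuDNN not set up)
        "gpus": [d.name for d in tf.config.list_physical_devices("GPU")],
    }


print(describe_environment())
```

Running this on both machines makes it easy to see which side is older before you start porting the model.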

Creating the model structure

If you still have the code you used to create your model, you can get the model structure directly by running that code again. I have shown an example of initializing the model structure using the Keras VGG19 model with a custom fully connected output layer.
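A minimal sketch of rebuilding such a structure, assuming a VGG19 base without its top layers followed by a flattening step and a single softmax `Dense` output layer. The input shape, `num_classes`, and the `build_vgg19_model` name are hypothetical; change them to match the model that produced your .h5 file, because `load_weights` expects the layers and weight shapes to line up with the saved ones.

```python
import tensorflow as tf


def build_vgg19_model(num_classes=10, input_shape=(224, 224, 3)):
    """Recreate a VGG19-based structure (hypothetical head) with untrained weights."""
    # weights=None: skip the ImageNet download; the real weights
    # are loaded from your .h5 file afterwards
    base = tf.keras.applications.VGG19(
        include_top=False, weights=None, input_shape=input_shape
    )
    x = tf.keras.layers.Flatten()(base.output)
    # Custom fully connected output layer; match units and activation
    # to the layer in your original model
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs=base.input, outputs=outputs)


model = build_vgg19_model()
model.summary()
```

If your original code added the VGG19 base as a single nested layer (for example inside a `Sequential` model), rebuild it the same way, since nesting changes how the weights are grouped in the file.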

If you no longer have the code you used to create your model, this is a little harder, because the solution for these errors is to recreate the model structure and load the weights into it. I will not go into detail about recreating the model structure, as it will vary with your model. Finding the layers and their weights stored in the .h5 file will help you recreate the model.
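One way to find those layers is to walk the file with the h5py package (install it with pip if it is missing) and collect every stored array with its shape. The group names usually mirror the layer names, and the shapes reveal each layer's size. The `list_h5_weights` helper below is a hypothetical sketch, not the function from the original article.

```python
import h5py


def list_h5_weights(path):
    """Return (dataset_path, shape) for every array stored in an HDF5 weights file."""
    found = []

    def visit(name, obj):
        # Datasets hold the weight arrays; groups only mirror the layer hierarchy
        if isinstance(obj, h5py.Dataset):
            found.append((name, obj.shape))

    with h5py.File(path, "r") as f:
        # visititems walks every group and dataset recursively
        f.visititems(visit)
    return found
```

Calling `list_h5_weights('Model_you_are_porting.h5')` and printing each entry gives a layer-by-layer view of the file. A `Dense` kernel of shape `(a, b)`, for instance, belongs to a layer with `b` units that takes `a` inputs. For a full model saved with `model.save()`, the weights typically sit under a `model_weights` group.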

Loading model weights

We have now created an untrained model. Next, we set its weights from the model you are porting using the following line of Python:

```python
# Load the saved weights into the freshly built model
model.load_weights('Model_you_are_porting.h5')
```

Note:

If the model you are importing was created with TensorFlow 1.x, run the following command at the start of your program, before building the model, to switch TensorFlow 2 to TensorFlow 1 behavior for compatibility.

```python
import tensorflow as tf

# Must run before any models or tensors are created
tf.compat.v1.disable_v2_behavior()
```

That's it! Hopefully your model now works without any problems :)
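The `load_weights` step can be sanity-checked on a small scale before trying it on the real model. The sketch below uses a hypothetical two-layer `build_toy_model` and a temporary `toy.weights.h5` file: it saves the weights of one instance, loads them into a freshly built copy, and checks that every array matches. It only exercises the mechanics within a single TensorFlow installation; it does not reproduce a move between versions.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def build_toy_model():
    # Hypothetical stand-in for your real architecture; the layer order
    # and shapes must match the model that produced the weights file
    inputs = tf.keras.Input(shape=(4,))
    x = tf.keras.layers.Dense(8, activation="relu")(inputs)
    outputs = tf.keras.layers.Dense(2)(x)
    return tf.keras.Model(inputs, outputs)


def weights_round_trip_ok():
    path = os.path.join(tempfile.mkdtemp(), "toy.weights.h5")
    original = build_toy_model()
    original.save_weights(path)  # HDF5, because the name ends in .h5

    restored = build_toy_model()  # fresh copy with new random weights
    restored.load_weights(path)

    saved, loaded = original.get_weights(), restored.get_weights()
    # Every weight array should now be identical to the saved one
    return len(saved) == len(loaded) and all(
        np.array_equal(a, b) for a, b in zip(saved, loaded)
    )


print(weights_round_trip_ok())
```

The `.weights.h5` suffix is chosen because Keras 3 requires it for `save_weights`, while older tf.keras versions treat any name ending in `.h5` as HDF5.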